Besides lots of family time and the creation of this blog/website, this is what I’ve been thinking about over the winter break.

Background

As part of my research in emergent reducibility, I’ve had to face a binary classification situation with severe class imbalance. Among brute-force searches, it seems that there’s roughly 1 case of emergent reducibility (what I’m looking for) for every 1 million irreducible cubic polynomials. It is known that there are infinitely many cubic polynomials with emergent reducibility.

One standard way of dealing with class imbalance is to artificially increase the incidence of positive cases in the training data, but I’ve seen very little about how to decide how much to adjust the ratio of the two classes - that’s what this post is about.

Training Data

To examine the relationship of class imbalance on several classifiers, I build 21 training sets each with the same 52 cases of emergent reducibility and between 500 and 2500 (by 100 increments) polynomials without emergent reducibility. Each training set was used to train a variety of logristic regression, random forest, naive Bayes, and k-nearest neighbor models via caret.

Confusion Matrices

Once the models were trained, they were all tested against the same data set with 23 cases of emergent reducibility (no overlap with training data) and 8000 cases without emergent reducibility. For each model and training set combination, a confusion “matrix” was build, this is in the file confMats.csv. Let’s read that into R and add another variable, mdlType that’s either logistic, RF, or other. This is to facet some graphs later.

confMats <- read.csv("../../static/post/confMats.csv", header=TRUE)

logLocations <- grep("lr", confMats$mdl)

rfLocations <- grep("rf", confMats$mdl)

confMats$mdlType <- vector(mode="character", length=length(confMats$mdl))

confMats[logLocations,]$mdlType <- "Logistic"

confMats[rfLocations,]$mdlType <- "RF"

confMats[!(1:length(confMats$mdl) %in% c(logLocations,rfLocations)),]$mdlType<-"Other"ROC Plots

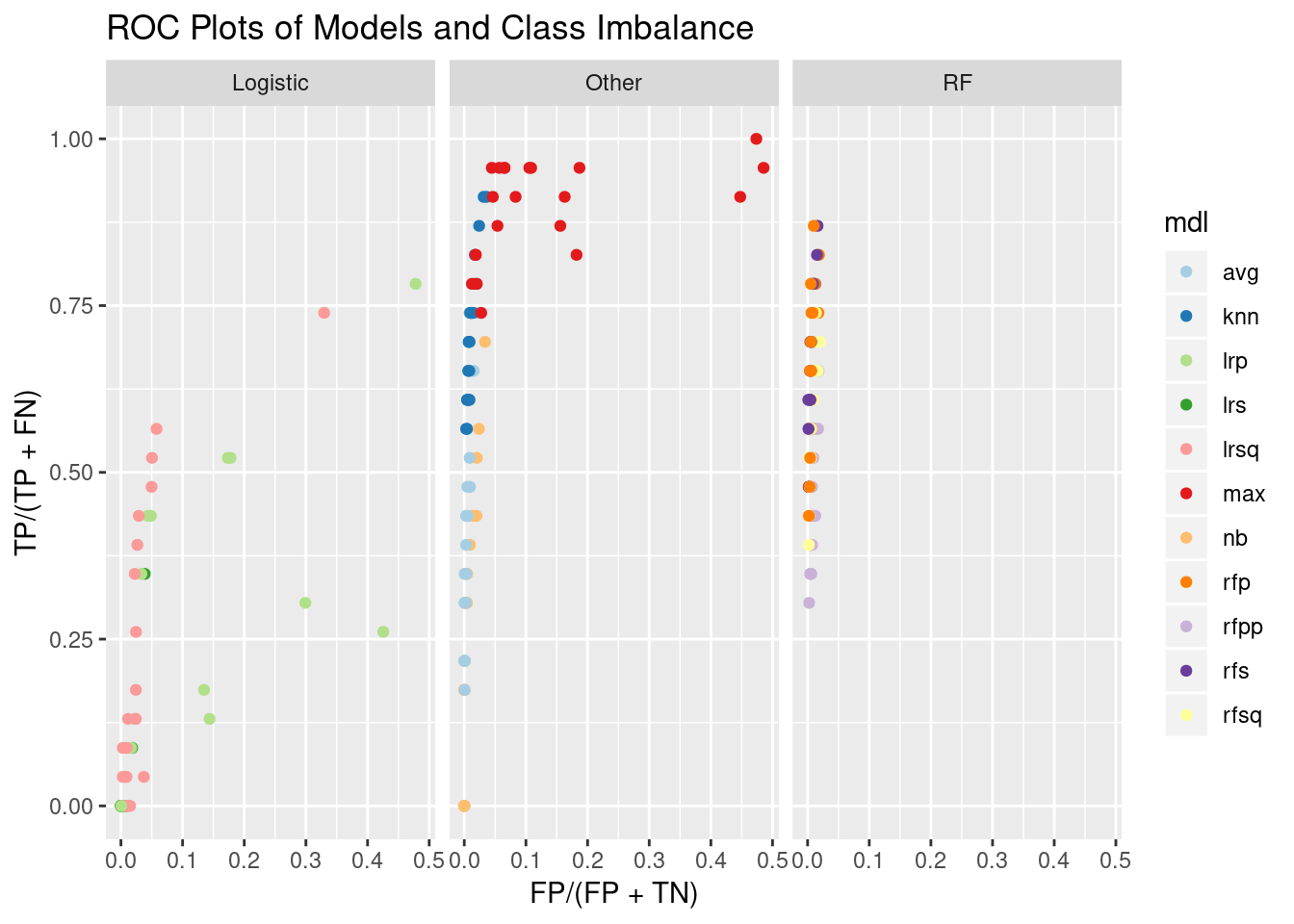

Now we’ll plot our confusion matrices in ROC space, each point is a model and training set combo. I’ve facetted by model type for readability.

library(ggplot2)

#11 distinct colors, courtesy of colorbrewer2.org

cb11<-c('#a6cee3','#1f78b4','#b2df8a','#33a02c','#fb9a99','#e31a1c','#fdbf6f','#ff7f00','#cab2d6','#6a3d9a','#ffff99')

ggplot(confMats,aes(x=FP/(FP+TN),y=TP/(TP+FN),col=mdl))+geom_point()+facet_wrap(~mdlType)+scale_color_manual(values=cb11)+ggtitle("ROC Plots of Models and Class Imbalance ")

The model max seems to find the most, but this simply marks a polynomial as having emergent reducibility if any other model says it does. This indicates some models find cases that others miss (I have some nice heatmaps showing this also, for another day). The logistic regression models have much more irregular variation than I was expecting.

To see how varying the number of non-emergent reducibile polynomials impacts performance, I’ll throw in some animation:

library(gganimate)

pathPlot <- ggplot(confMats,aes(x=FP/(FP+TN),y=TP/(TP+FN),col=mdl,frame=ner))+geom_path(aes(cumulative=TRUE, group=mdl))+facet_wrap(~mdlType)+scale_color_manual(values=cb11)+ggtitle("Animated ROC Paths")

gganimate(pathPlot, "../../static/post/pathPlot.gif")I’m saving the gif and then displaying it outside the code chunk. This is because animated graphs seem to be turned pink inside code chunks.

pathPlot.gif

The random forest and knn models seem pretty stable as the number of non-emergent reducible case changes. Looking at the number of true positives we see a gradual decline as ner increases:

library(knitr)

nerRF.tab <- xtabs(TP~ner+mdl, data=confMats[confMats$mdl %in% c("rfs","rfp","rfpp","rfsq","knn"),], drop.unused.levels = TRUE)

kable(nerRF.tab)| knn | rfp | rfpp | rfs | rfsq | |

|---|---|---|---|---|---|

| 500 | 21 | 19 | 15 | 20 | 16 |

| 600 | 21 | 17 | 13 | 19 | 15 |

| 700 | 20 | 20 | 13 | 18 | 17 |

| 800 | 19 | 17 | 13 | 18 | 15 |

| 900 | 18 | 18 | 10 | 18 | 17 |

| 1000 | 18 | 16 | 11 | 16 | 14 |

| 1100 | 17 | 17 | 12 | 16 | 14 |

| 1200 | 17 | 17 | 12 | 18 | 16 |

| 1300 | 17 | 18 | 10 | 15 | 14 |

| 1400 | 17 | 13 | 10 | 16 | 12 |

| 1500 | 14 | 13 | 11 | 14 | 10 |

| 1600 | 15 | 15 | 10 | 15 | 13 |

| 1700 | 16 | 16 | 9 | 16 | 13 |

| 1800 | 15 | 15 | 9 | 14 | 12 |

| 1900 | 16 | 13 | 9 | 14 | 13 |

| 2000 | 14 | 10 | 8 | 14 | 10 |

| 2100 | 14 | 11 | 9 | 13 | 12 |

| 2200 | 13 | 12 | 8 | 11 | 13 |

| 2300 | 16 | 11 | 9 | 13 | 9 |

| 2400 | 13 | 11 | 7 | 11 | 9 |

| 2500 | 14 | 11 | 8 | 11 | 11 |

The logistic regression models show the odd variation:

TPnerLR.tab <- xtabs(TP~ner+mdl, data=confMats[confMats$mdlType == "Logistic",], drop.unused.levels = TRUE)

kable(TPnerLR.tab)| lrp | lrs | lrsq | |

|---|---|---|---|

| 500 | 10 | 8 | 12 |

| 600 | 8 | 2 | 13 |

| 700 | 10 | 0 | 4 |

| 800 | 3 | 0 | 6 |

| 900 | 0 | 0 | 9 |

| 1000 | 0 | 0 | 11 |

| 1100 | 2 | 0 | 3 |

| 1200 | 2 | 0 | 10 |

| 1300 | 0 | 0 | 1 |

| 1400 | 6 | 0 | 0 |

| 1500 | 12 | 0 | 1 |

| 1600 | 4 | 0 | 3 |

| 1700 | 12 | 0 | 1 |

| 1800 | 7 | 0 | 17 |

| 1900 | 3 | 0 | 0 |

| 2000 | 0 | 0 | 1 |

| 2100 | 0 | 0 | 0 |

| 2200 | 0 | 0 | 2 |

| 2300 | 18 | 0 | 2 |

| 2400 | 0 | 0 | 8 |

| 2500 | 0 | 0 | 0 |

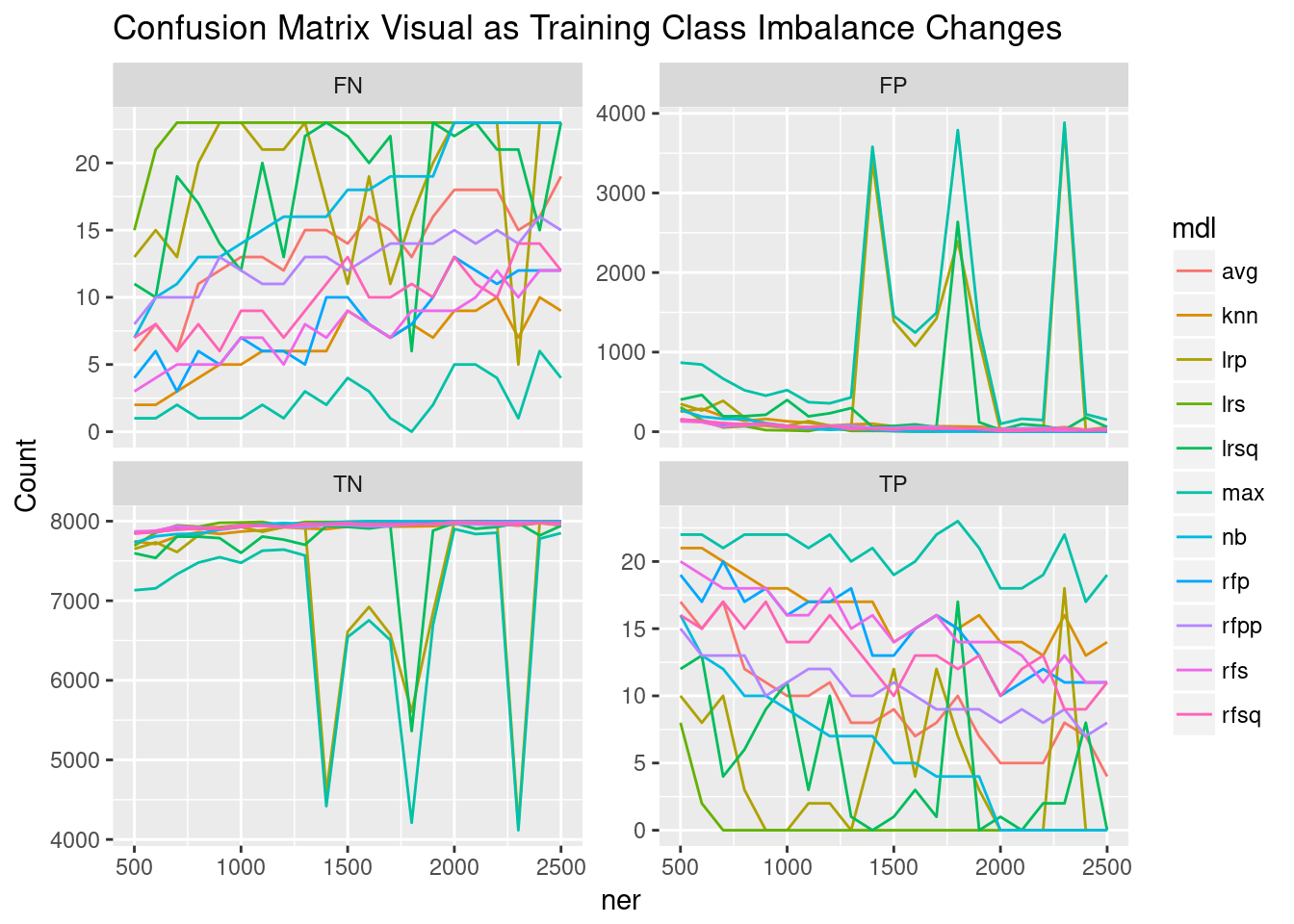

The variation across elements of the confusion matrices is perhaps best seen in the following plot:

library(tidyr)

library(dplyr)

gather(confMats, key=Type, value=Count, -c(ner, mdl, mdlType)) %>% ggplot(aes(x=ner, y=Count, col=mdl))+geom_line()+facet_wrap(~Type, scales = "free_y")+ggtitle("Confusion Matrix Visual as Training Class Imbalance Changes")

Clearly, there’s something in the ner 1500,1700,1800, and 2300 training sets that really helps logistic models but not other model types. This is something to look into.

However, I’m still left wondering What is the best ratio of classes in a training set?